by K. Richard, August 20, 2020 in NoTricksZone

Clouds dominate as the driver of changes in the Earth’s radiation budget and climate. A comprehensive new analysis suggests we’re so uncertain about cloud processes and how they affect climate that we can’t even quantify our uncertainty.

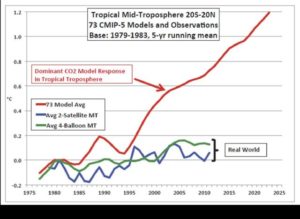

According to Song et al. (2016), the total net forcing for Earth’s oceanic atmospheric greenhouse effect (Gaa) during 1992-2014 amounted to -0.04 W/m² per year. In other words, the trend in total longwave forcing had a net negative (cooling) influence during those 22 years despite a 42 ppm increase in CO2. This was primarily due to a downward trend in cloud cover that overwhelmed, or “offset,” the longwave influence of CO2.
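To put a per-year trend of -0.04 W/m² in perspective, the short sketch below accumulates it over the 22-year span. It assumes, as a simplification of our own rather than anything in Song et al. (2016), that the trend is linear and constant across 1992-2014; the function name `cumulative_change` is purely illustrative.

```python
# Minimal sketch: total change implied by a constant linear trend.
# Assumption (ours, not Song et al., 2016): the -0.04 W/m² per year trend
# applies uniformly across the whole 1992-2014 period.

TREND_W_M2_PER_YEAR = -0.04  # total net Gaa forcing trend quoted in the text
START_YEAR = 1992
END_YEAR = 2014


def cumulative_change(trend_per_year: float, start: int, end: int) -> float:
    """Return the total change (W/m²) implied by a linear trend from start to end."""
    years = end - start  # 22 years, matching the span quoted in the text
    return trend_per_year * years


if __name__ == "__main__":
    total = cumulative_change(TREND_W_M2_PER_YEAR, START_YEAR, END_YEAR)
    print(f"{END_YEAR - START_YEAR} years x {TREND_W_M2_PER_YEAR} W/m² per year = {total:.2f} W/m²")
```

Under that assumption the trend sums to roughly -0.88 W/m² over the period. The calculation ignores the statistical uncertainty attached to the trend estimate itself.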

…

Cloud impacts on climate are profound – but so are uncertainties

Clouds profoundly affect Earth’s radiation budget, easily overwhelming CO2’s impact within the greenhouse effect. Scientists have acknowledged this for decades.

Despite the magnitude of clouds’ radiative impact on climate, scientists have also pointed out that our limited capacity to observe or measure cloud effects necessarily results in massive uncertainties.

For example, Stephens et al. (2012) estimated that the uncertainty in Earth’s annual longwave surface fluxes is ±9 W/m² (a total spread of ~18 W/m²), primarily because of uncertainty in the longwave radiative effects of clouds.

…