by Pat Frank, March 1, 2020 in WUWT

Last February 7, statistician Richard Booth, Ph.D. (hereinafter, Rich) posted a very long critique titled “What do you mean by ‘mean’: an essay on black boxes, emulators, and uncertainty,” which is very critical of the GCM air temperature projection emulator in my paper. He was also very critical of the notion of predictive uncertainty itself.

This post critically assesses his criticism.

An aside before the main topic. In his critique, Rich made many of the same mistakes in physical error analysis as do climate modelers. I have described the incompetence of that guild at WUWT here and here.

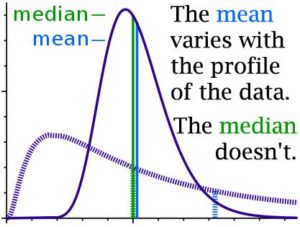

Rich and climate modelers both treat the probability distribution of the output of a model of unknown physical competence and accuracy as identical to physical error and predictive reliability.

Their view is wrong.

Unknown physical competence and accuracy describes the current state of climate models (at least until recently; see also Anagnostopoulos et al. (2010), Lindzen & Choi (2011), Zanchettin et al. (2017), and Loehle (2018)).

GCM climate hindcasts are not tests of accuracy, because GCMs are tuned to reproduce hindcast targets (for example, here, here, and here). Testing GCMs against a past climate that they were tuned to reproduce is no indication of physical competence.

When a model is of unknown competence in physical accuracy, the statistical dispersion of its projective output cannot be a measure of physical error or of predictive reliability.
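The distinction between dispersion and error can be sketched with a toy numerical example. This is only an illustration under stated assumptions, not anything taken from the paper or from a real GCM: the function `run_toy_ensemble`, the "true" value, the shared bias, and the noise level are all hypothetical. Every ensemble member shares the same systematic error, so the members agree closely with one another while all being far from the truth.

```python
import random
import statistics


def run_toy_ensemble(true_value, bias, member_noise, n_members, seed=0):
    """Return outputs of a hypothetical ensemble.

    Every member carries the same systematic bias (unknown in practice)
    plus a small member-to-member perturbation.
    """
    rng = random.Random(seed)
    return [
        true_value + bias + rng.gauss(0.0, member_noise)
        for _ in range(n_members)
    ]


# All numbers below are assumptions chosen for illustration only.
TRUE_VALUE = 1.0  # the physically correct answer, normally not known
BIAS = 3.0        # systematic model error shared by all members

members = run_toy_ensemble(TRUE_VALUE, BIAS, member_noise=0.1, n_members=50)

# Dispersion: how closely the ensemble members agree with each other.
spread = statistics.stdev(members)

# Physical error: how far the ensemble mean is from the true value.
error = abs(statistics.mean(members) - TRUE_VALUE)

print(f"ensemble spread (std dev): {spread:.3f}")
print(f"error of ensemble mean:    {error:.3f}")
```

In this sketch the spread is set by the member-to-member noise alone, while the error is dominated by the shared bias. Nothing in the spread reveals the size of the bias, which can only be found by comparison against an independent observation.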

Ignorance of this problem entails the very basic scientific mistake that climate modelers evidently strongly embrace and that appears repeatedly in Rich’s essay. It reduces both contemporary climate modeling and Rich’s essay to scientific vacancy.

The correspondence of Rich’s work with that of climate modelers reiterates something I realized after much immersion in published climatology literature — that climate modeling is an exercise in statistical speculation. Papers on climate modeling are almost entirely statistical conjectures. Climate modeling plays with physical parameters but is not a branch of physics.

I believe this circumstance refutes the American Statistical Association’s statement that more statisticians should enter climatology. Climatology doesn’t need more statisticians because it already has far too many: the climate modelers who pretend at science. Consensus climatologists play at scienceness and can’t discern the difference between that and the real thing.

Climatology needs more scientists. Evidence suggests many of the good ones previously resident have been caused to flee.

…