by Li, F. et al., July 31, 2020 in Front. Earth Sci.

As a toxic and harmful global pollutant, mercury enters the environment through natural sources and human activities. Based on large numbers of previous studies, this paper summarized the characteristics of mercury deposition and the impacts of climate change and human activities on mercury deposition from a global perspective. The results indicated that global mercury deposition changed synchronously, with more accumulation during the glacial period and less accumulation during the interglacial period. Mercury deposition fluctuated greatly during the Early Holocene but was stable and low during the Mid-Holocene. During the Late Holocene, mercury deposition reached its highest value. An increase in precipitation promotes a rise in forest litterfall Hg deposition. Nevertheless, there is a paucity of research on the mechanisms of mercury deposition affected by long-term humidity changes. Mercury accumulation was relatively low before the Industrial Revolution ca. 1840, while after industrialization, intensive industrial activities produced large amounts of anthropogenic mercury emissions and the accumulation increased rapidly. Since the 1970s, the center of global mercury production has gradually shifted from Europe and North America to Asia. On the scale of hundreds of thousands of years, mercury accumulation was greater in cold periods and less in warm periods, reflecting exogenous dust inputs. On millennial timescales, the correspondence between mercury deposition and temperature is less significant, as the former is more closely related to volcanic eruption and human activities. However, there remain significant uncertainties, such as the non-uniform distribution of research sites, the lack of mercury deposition reconstructions with a long timescale and sub-century resolution, and the unclear relationship between precipitation change and mercury accumulation.

…

by K. Richard, August 3, 2020 in NoTricksZone

A new study finds that 26 to 19 thousand years ago, with CO2 concentrations as low as 180 ppm, fire activity was an order of magnitude more prevalent than today near the southern tip of Africa – mostly because summer temperatures were 3-4°C warmer.

We usually assume the last glacial maximum – the peak of the last ice age – was significantly colder than it is today.

But evidence has been uncovered that wild horses fed on exposed grass year-round on Alaska’s North Slope, in the Arctic, about 20,000 to 17,000 years ago, when CO2 concentrations were at their lowest and yet “summer temperatures were higher here than they are today” (Kuzmina et al., 2019). Horses had a “substantial dietary volume” of dried grasses year-round at this time, even in winter, but the Arctic is presently “no place for horses” because there is too little for them to eat, and what food there is lies “deeply buried by snow” (Guthrie and Stoker, 1990).

A new study (Kraaij et al., 2020) finds evidence that “the number of days per annum with high or higher fire danger scores was almost an order of magnitude larger during the LGM [last glacial maximum, 19-26 ka BP] than under contemporary conditions” near Africa’s southernmost tip, and that “daily maximum temperatures were 3-4°C higher than present in summer (and 2-4°C lower than present in winter), which would have contributed to the high severity of fire weather during LGM summers.”

Neither conclusion – that surface temperatures would be warmer or that fires would be more common – would seem to be consistent with the position that CO2 variations drive climate or heavily contribute to fire patterns.

by Roy Spencer, August 4, 2020 in WUWT

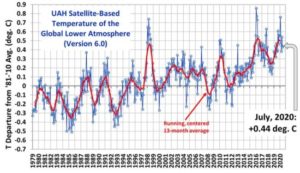

The Version 6.0 global average lower tropospheric temperature (LT) anomaly for July, 2020 was +0.44 deg. C, essentially unchanged from the June, 2020 value of +0.43 deg. C.

The linear warming trend since January, 1979 remains at +0.14 C/decade (+0.12 C/decade over the global-averaged oceans, and +0.18 C/decade over global-averaged land).
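For readers unfamiliar with how a figure such as "+0.14 C/decade" is obtained, here is a minimal sketch of a linear trend calculation. It assumes an ordinary least-squares fit to monthly anomalies, which the post does not spell out. The function name `trend_per_decade` and the `synthetic` series are hypothetical illustrations, not the actual Version 6.0 dataset.

```python
def trend_per_decade(anomalies, start_year=1979, start_month=1):
    """Ordinary least-squares slope of monthly anomalies, in deg C per decade.

    Assumption: one value per consecutive month, beginning at the given
    start year and month (defaults match the January 1979 start in the text).
    """
    n = len(anomalies)
    # Time axis in decimal years, each value placed at mid-month.
    t = [start_year + (start_month - 1 + i + 0.5) / 12.0 for i in range(n)]
    t_mean = sum(t) / n
    y_mean = sum(anomalies) / n
    # Slope = covariance(t, y) / variance(t), in deg C per year.
    cov = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, anomalies))
    var = sum((ti - t_mean) ** 2 for ti in t)
    return 10.0 * cov / var  # convert per-year to per-decade


# Synthetic series, NOT real satellite data: an exact straight line rising
# at 0.014 deg C per year over 499 months (January 1979 through July 2020).
synthetic = [0.014 * (i / 12.0) - 0.3 for i in range(499)]
print(round(trend_per_decade(synthetic), 3))
```

Because the synthetic series is a perfect line, the fit simply recovers the slope it was built with. Real monthly anomalies are noisy, so the fitted trend depends on the start and end dates chosen.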

Geology, a more than fascinating … and diverse science